[Note on Brand Evolution] This post discusses concepts and methodologies initially developed under the scientific rigor of Shaolin Data Science. All services and executive engagements are now delivered exclusively by Shaolin Data Services, ensuring strategic clarity and commercial application.

One trend worth highlighting is the relationship between learning and big data analytics, and the corresponding technology is artificial intelligence (AI) and its related tools. As AI tools become more ubiquitous, using vast quantities of data to improve student learning experiences is a trend to watch. Existing implementations have underperformed expectations. This disappointing return on investment stems from barriers such as student privacy and equity, faculty buy-in, and limited data reporting staff or resources (Pelletier et al., 2022). Data reporting staff and resources fluctuate in every industry. Like any other job, the availability of such professionals is market-driven.

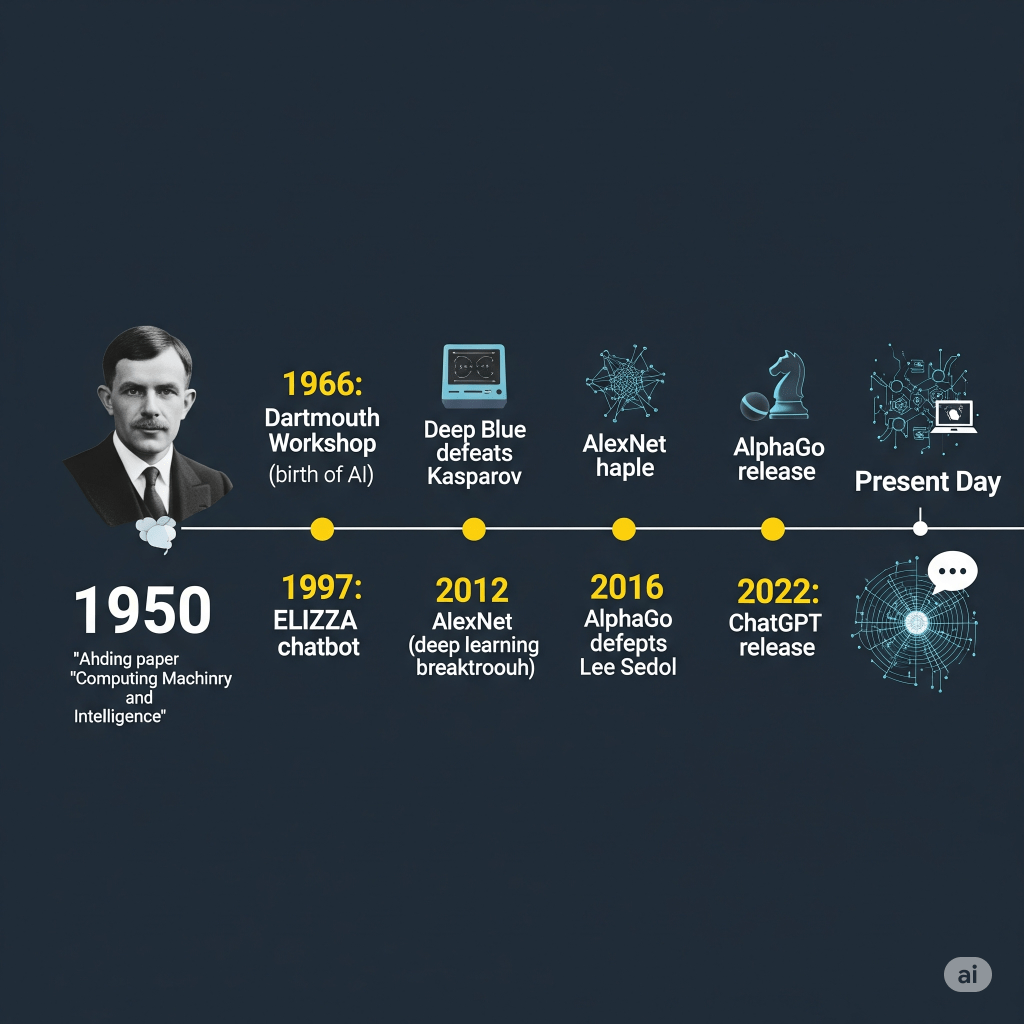

However, barriers such as student privacy will necessarily diminish as society becomes more data-centric. Specifically, as generative AI developers and institutions establish standards of acceptable behavior for AI tool usage, student data privacy and equity will naturally fall into place. The reasoning behind this argument is that students must already provide their data to companies to use these tools. Moreover, as consumers, students provide their data in many other aspects of daily life. It therefore seems somewhat inconsistent to withhold data from an institution that aims to empower their futures. As Figure 1 illustrates, each major development implicitly reflects the relationship between societies' willingness to provide their data to private companies and their broader acceptance of data collection. Before AI was commercialized, the public would have been wary of submitting their data to academic institutions at all. The point of this juxtaposition is that technological advancement requires an underlying acceptance and understanding of data collection. The debate should therefore shift toward the ethics of each data application's use case.

Figure 1

AI Through the Years.

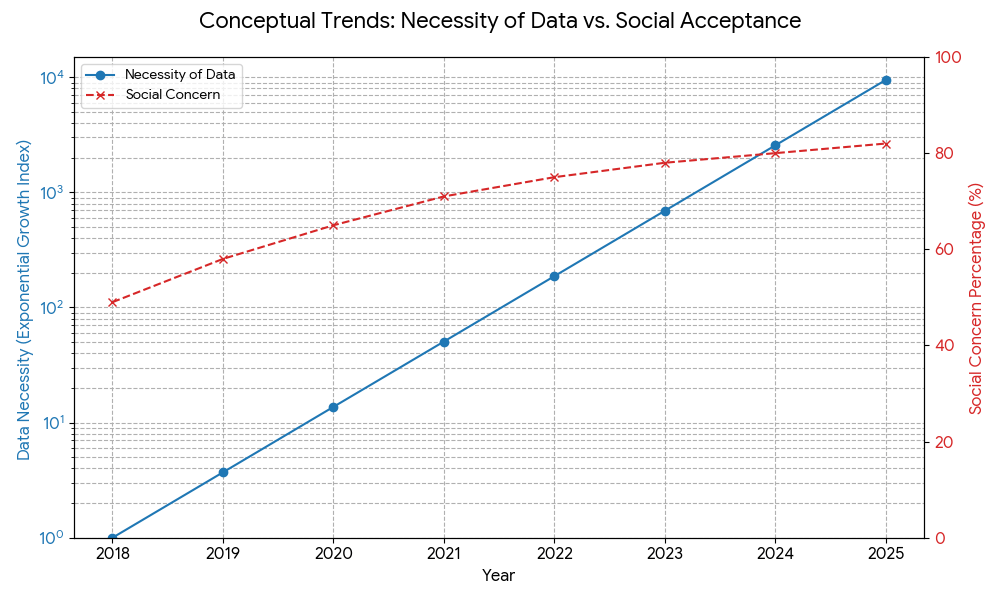

Arguably, a critical facet of faculty buy-in is the ability to distinguish students' authentic work from tool-generated material. This factor also depends on how these tools develop in public and commercial contexts. Specifically, the general public needs time to become comfortable with new technologies, and generative AI only exacerbates this discomfort. As a result, the same private companies that develop and release generative AI tools are also releasing tools to detect AI-generated material (Kirchner et al., 2023). As humans have done with every previous tool, from the stick to the mobile device, they will integrate these generative tools into mundane aspects of life. The not-too-distant future may bring more anthropomorphic robotic assistants, in the same way that many people now use voice assistants such as Google Assistant, Alexa, and Siri on countertop devices. Figure 2 expands on this concept.

Figure 2

The Shifting Landscape: Data Collection’s Necessity vs. Social Acceptance Over Time.

Forces that can impede this trend and the development of this technology include the social acceptance of generative AI and the ethical application of collected data. Put simply, data is like any other information or weapon: it is only as helpful as its wielder. The crucial corollary of this premise is that the wielder must know how to use what they have. This premise extends to technology leaders' call for AI labs to pause the training of new, more powerful models for six months (Nicastro, 2023). It also extends to governmental bodies such as the EU, which proposed regulations requiring a review before certain new AI systems are released commercially (Hawley, 2022). These impediments ultimately benefit development because they give users an acclimation period. Specifically, such pauses and mandates give institutions time to respond appropriately to the tools' development.

References:

Hawley, M. (2022, May 19). Examining marketing impact of EU AI Act. CMSWire. https://www.cmswire.com/digital-marketing/what-europes-ai-act-could-mean-for-marketers/

Hawley, M. (2023, June 28). The complete generative AI timeline: History, present and future outlook. CMSWire. https://www.cmswire.com/digital-experience/generative-ai-timeline-9-decades-of-notable-milestones/

Kirchner, J. H., Ahmad, L., Aaronson, S., & Leike, J. (2023, January 31). New AI classifier for indicating AI-written text. OpenAI. https://openai.com/blog/new-ai-classifier-for-indicating-ai-written-text/

Nicastro, D. (2023, March 29). Stop "giant AI experiments." CMSWire. https://www.cmswire.com/digital-experience/musk-wozniak-and-1100-others-pause-giant-ai-experiments/

Pelletier, K., McCormack, M., Reeves, J., Robert, J., & Arbino, N. (2022). 2022 EDUCAUSE Horizon Report, teaching and learning edition. EDUCAUSE.
