[Note on Brand Evolution] This post discusses concepts and methodologies initially developed under the scientific rigor of Shaolin Data Science. All services and executive engagements are now delivered exclusively by Shaolin Data Services, ensuring strategic clarity and commercial application.

In the world of data science, we often talk about algorithms, models, and machine learning. But before any of that can deliver value, you need a foundation of discipline and structure. A data project without a plan is a sword without a master. This is a lesson I learned firsthand during my Master of Science in Computer Science studies.

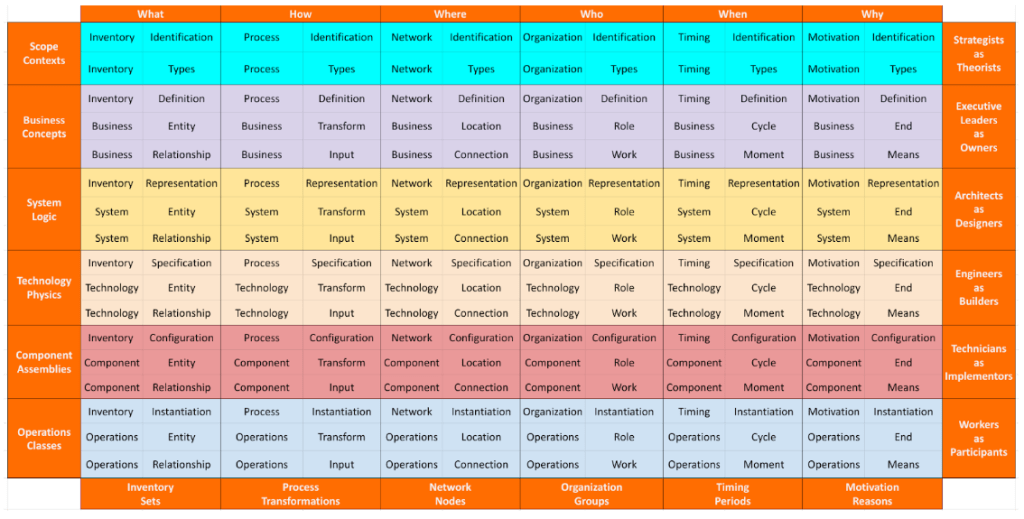

One of the most powerful blueprints for this disciplined approach is the Zachman Framework, a standard for enterprise architecture that provides a holistic view of an organization. It helped me understand that a project’s success hinges on aligning every stakeholder’s viewpoint, from the owner to the builder. But a framework on its own isn’t enough. The true value lies in its quality implementation.

In my book, “Data Science for the Modern Enterprise,” I outline the best practices for a truly rigorous, repeatable data strategy. Here are the core pillars of that methodology:

Eliminating Internal Data Silos

Data silos are a business’s greatest enemy: a widely recognized problem that introduces friction and inefficiency and prevents organizations from establishing a single source of truth (IBM, 2025; Databricks, 2024). My methodology emphasizes a strategic, multi-faceted approach to breaking down these silos and cultivating a unified data landscape.

As research indicates, this is not merely a technical challenge. The true value lies in addressing the cultural and organizational barriers that lead to data inconsistency and a lack of interoperability between teams (Talend, 2024; Oracle, 2024). By doing so, we ensure that every team is working from the same information, which is the essential foundation for trustworthy, high-impact insights.

Rigorously Validating the Data

Bad data leads to bad decisions. Period. This is not just an axiom of business but a principle validated by academic and industry research, which has shown that poor data quality can cost businesses 15% to 25% of their revenue (Redman, 2017).

A quality data strategy demands a methodical and disciplined approach to data validation. It means having a systematic methodology to verify the accuracy, consistency, and integrity of your data across its entire lifecycle (Eckerson, 2021). This is the rigor that separates a good insight from a catastrophic mistake.

By grounding your strategy in this process, you gain a quantifiable advantage. Businesses that prioritize a disciplined data validation process are more likely to achieve a higher return on their data investments and gain a critical competitive edge (IBM, 2023).
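To make the idea of systematic validation concrete, here is a minimal Python sketch. It is an illustration only, not the methodology from the book: the `Order` record, its fields, and the three rules are hypothetical, chosen so that each check maps to one of the properties named above (accuracy, consistency, integrity).

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Order:
    # Hypothetical record layout, used only for illustration.
    order_id: str
    customer_id: str
    amount: float
    order_date: date


def validate_orders(orders, known_customers, today):
    """Return a list of (order_id, problem) tuples; an empty list means every check passed."""
    problems = []
    seen_ids = set()
    for order in orders:
        # Accuracy: values must fall inside a plausible range.
        if order.amount <= 0:
            problems.append((order.order_id, "non-positive amount"))
        if order.order_date > today:
            problems.append((order.order_id, "order date in the future"))
        # Consistency: an identifier may appear only once in the batch.
        if order.order_id in seen_ids:
            problems.append((order.order_id, "duplicate order_id"))
        seen_ids.add(order.order_id)
        # Integrity: every order must reference a customer we know about.
        if order.customer_id not in known_customers:
            problems.append((order.order_id, "unknown customer_id"))
    return problems


orders = [
    Order("A1", "C1", 120.0, date(2024, 5, 1)),
    Order("A2", "C9", -5.0, date(2024, 5, 2)),
    Order("A1", "C1", 80.0, date(2024, 5, 3)),
]
issues = validate_orders(orders, known_customers={"C1", "C2"}, today=date(2024, 6, 1))
for order_id, problem in issues:
    print(f"{order_id}: {problem}")
```

Returning a list of problems, rather than stopping at the first failure, lets the same routine run at every stage of the data lifecycle and feed a quality report instead of merely blocking a load.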

Standardizing Data Structures and Formats

Imagine every builder on a construction site using a different tape measure. That’s the chaos that ensues when data isn’t standardized, leading to significant inefficiency and an inability to compare information across the organization (SolveXia, 2024).

My methodology prioritizes creating and enforcing standards for data structures and formats as a critical component of data governance (Simplilearn, 2025). This ensures “data interoperability,” the ability of all systems to exchange and understand information seamlessly, which is the foundation for repeatable, trustworthy results (NCBI, 2019). This disciplined approach streamlines your analytics and provides a unified view of your business, which is essential for collaborative and effective decision-making (FacilityONE, 2025).
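In the spirit of the tape-measure analogy, the sketch below shows one way standardization might look in Python. Everything in it is an assumption made for illustration: the accepted date formats, the choice of ISO 8601 and meters as canonical forms, and the function names. Slash-separated dates are assumed to be day-first, and month abbreviations are assumed to be English.

```python
from datetime import datetime

# Assumed input formats, tried in order; slash dates are treated as day/month/year.
DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y", "%b %d, %Y")

# Conversion factors to the assumed canonical unit (meters).
TO_METERS = {"m": 1.0, "cm": 0.01, "ft": 0.3048, "in": 0.0254}


def standardize_date(raw):
    """Parse a date written in any accepted format and return it as ISO 8601 (YYYY-MM-DD)."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(raw.strip(), fmt).date().isoformat()
        except ValueError:
            continue
    raise ValueError(f"unrecognized date format: {raw!r}")


def standardize_length(value, unit):
    """Convert a length to meters, rounded to four decimal places."""
    key = unit.strip().lower()
    if key not in TO_METERS:
        raise ValueError(f"unrecognized unit: {unit!r}")
    return round(value * TO_METERS[key], 4)


print(standardize_date("05/03/2024"))
print(standardize_date("Mar 5, 2024"))
print(standardize_length(10, "ft"))
```

Rejecting unrecognized formats with an error, instead of guessing, is a deliberate choice here: a silent guess would reintroduce exactly the inconsistency that standardization is meant to remove.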

Planning for the Future

A data project is not a one-time event. A quality implementation is a forward-looking one. It requires building a system that can adapt to new challenges, integrate new data sources, and scale as the business grows. This long-term thinking is the true sign of a mastered data strategy.

These principles, inspired by my academic journey, are the “Tiger Claws” of my methodology. They are the disciplined movements that ensure every action is precise and every result is impactful. To dive deeper into these frameworks and master the art of data-driven proposals, my book is the definitive guide.

References

Databricks. (2024). The State of Data and AI. Databricks.

Eckerson, W. (2021). Data Quality Management Best Practices. Eckerson Group.

Egnyte. (2022). Data Silos: A Business’s Worst Enemy. Egnyte.

FacilityONE. (2025). Why is Data Standardization Important in FM? FacilityONE.

IBM. (2023). The Business Value of Data Quality. IBM.

IBM. (2025). What Are Data Silos? IBM.

NCBI. (2019). Data Interoperability. National Center for Biotechnology Information.

Oracle. (2024). What is a Data Silo? Oracle.

Redman, T. C. (2017). Data Quality: The Field Guide. Data Quality Solutions.

Simplilearn. (2025). What is Data Standardization? Simplilearn.

SolveXia. (2024). Data Silos: Definition, Problems, and Solutions. SolveXia.

Talend. (2024). What is a Data Silo? Talend.
