“We’re investing millions in data, but our data quality is still a mess, and governance feels like constant firefighting.”

If you are a C-suite executive or a VP of Data, you’ve likely said this, or heard it in a tense board meeting. You’ve hired the engineers and bought the “Single Source of Truth” platforms, yet the reports are still inconsistent, and the “Data Mesh” you were promised feels more like a data mess.

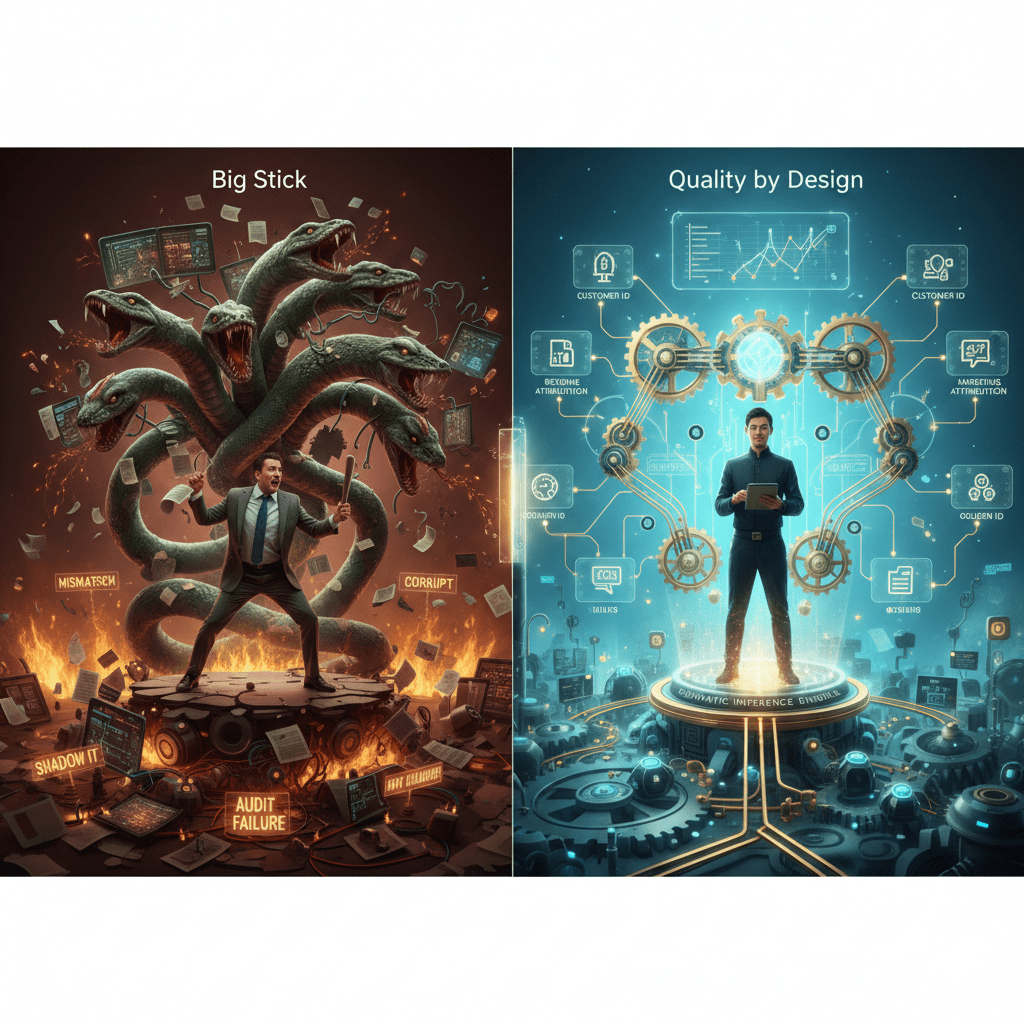

The problem isn’t your talent. It’s your Big Stick.

The Trap: Enforcement-First Governance

Most organizations treat data governance as a policing action. They build a central team, arm them with a thick book of policies, and tell them to “enforce” quality. This is the Big Stick approach. It’s reactive, it’s punitive, and it’s a relic of a legacy world where all data lived in one room.

In today’s distributed, high-velocity cloud environments, the Big Stick approach fails for three reasons:

- It Doesn’t Scale: A central team cannot possibly police every byte across a global enterprise. It becomes a bottleneck, not a guardian, and the problem only compounds as data volumes keep growing.

- It Breeds Resentment: When governance is an obstacle, teams find workarounds. They build “Shadow IT” systems to get their jobs done, further fragmenting your data and fostering a culture of non-compliance.

- It’s Always Late: Enforcement happens after the damage is done. You are fixing symptoms while the root causes—the poorly designed workflows—continue to pump out “dirty” data, turning data scientists into data janitors.

The Mismatch: Modern Architecture vs. Legacy Policing

If you are moving toward a Data Mesh or Data Fabric, the Big Stick is actually your greatest enemy. You cannot have domain-driven autonomy, the core promise of Data Mesh, if a central team micromanages every step of how each domain builds its data products. This “mismatched governance” is a common reason digital transformations stall: you’re trying to run a decentralized architecture with a centralized, authoritarian mindset, and the ambition for real-time insights and adaptable data products collapses under the weight of retroactive audits.

SCENARIO: The Quarterly Report Debacle

Internal Memo: Urgent Priority – Quarterly Earnings Report Delayed

TO: All Department Heads

FROM: CFO’s Office

DATE: October 26th

SUBJECT: Q3 Earnings Release – Further Delay

Due to persistent inconsistencies identified between the CRM’s sales data, the ERP’s revenue figures, and the marketing department’s attribution models, the Q3 earnings release will be delayed by an additional two weeks. Our central Data Governance team has identified multiple instances of non-standardized customer IDs and conflicting revenue recognition dates across independently managed data sets. This manual reconciliation process, requiring cross-departmental validation meetings, is consuming critical resources and impacting investor confidence. We urge all teams to strictly adhere to existing data input policies.

For many enterprises, this isn’t a hypothetical scenario; it’s a recurring nightmare. The “Big Stick” governance team tried to enforce policies, but the underlying systems were never designed to prevent these inconsistencies in the first place. The cost is paid in lost market trust and operational drag.

The Solution: Quality by Design – Architecting Inevitable Data Quality

The alternative isn’t “no governance”—it’s Quality by Design. Instead of policing data after it’s created, we must design systems where high-quality data is the natural, easy, and only outcome. It’s the difference between hiring a hundred inspectors to check for leaks and building a pipe that cannot leak in the first place.

The Pillars of a Quality-by-Design Framework:

- Proactive Standardization with Semantic Inference:

  - Instead of reactive “data dictionaries” that try to document existing chaos, we define universal standards (common vocabularies, business rules, and Golden IDs) before a single line of code is written or any data is ingested.

  - This is where Semantic Inference becomes critical. Instead of relying on manual tagging or fragile keyword matching, we design systems that capture the context and meaning of data at its point of origin. That enables loosely coupled frameworks in which data domains operate autonomously yet remain interoperable. Data elements are “born” with their meaning and quality attributes attached, which reduces the need for after-the-fact “translation” or “correction.”

- Built-in Validation and Guardrails:

  - We embed validation rules directly into data pipelines, APIs, and data product interfaces. If data doesn’t meet the defined quality standards (e.g., integrity constraints, format rules, referential consistency), it simply cannot enter the system or be published as a data product.

  - This creates robust, automated guardrails. Developers and data owners know the “contract” their data must fulfill, and the system blocks deviations programmatically, replacing subjective interpretation and manual checks with consistent, automated enforcement.

- Empowering Autonomy Within Enterprise Boundaries:

  - The goal isn’t centralization; it’s controlled decentralization. We empower domain teams to manage their own data products, but we equip them with the tools and the non-negotiable architectural “contracts” they must meet. Because these contracts are derived from the proactive standards, domains can operate independently while their data still “speaks the same language” as the rest of the enterprise.

  - This fosters a culture of ownership and responsibility, where teams are invested in the quality of their data because they are empowered to design it correctly from the start.
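To make the idea of a data “contract” more concrete, here is a minimal Python sketch of a validation gate that a domain pipeline might run before publishing records. Everything in it is hypothetical and chosen only for illustration: the `RevenueRecord` shape, the `CUST-` plus eight digits Golden ID format, the reporting-period rule, and the `validate`/`publish` helpers are assumptions, not a specific product or API. It applies the three kinds of checks named above (a format rule, referential consistency, and an integrity constraint) and rejects bad records at the boundary, which is exactly where the non-standardized customer IDs and conflicting revenue recognition dates from the memo would have been caught.

```python
from __future__ import annotations

import re
from dataclasses import dataclass
from datetime import date

# Hypothetical enterprise-wide standard: every domain uses the same Golden ID format.
GOLDEN_CUSTOMER_ID = re.compile(r"CUST-\d{8}")


@dataclass(frozen=True)
class RevenueRecord:
    """Hypothetical record shape a sales or finance domain might publish."""
    customer_id: str
    amount_cents: int
    recognized_on: date


class ContractViolation(ValueError):
    """Raised when a record does not satisfy the published data contract."""


def validate(record: RevenueRecord, known_customers: set[str],
             period_start: date, period_end: date) -> RevenueRecord:
    # Format rule: the ID must match the shared Golden ID standard exactly.
    if not GOLDEN_CUSTOMER_ID.fullmatch(record.customer_id):
        raise ContractViolation(f"non-standard customer_id: {record.customer_id!r}")
    # Referential consistency: the customer must exist in the master customer list.
    if record.customer_id not in known_customers:
        raise ContractViolation(f"unknown customer_id: {record.customer_id!r}")
    # Integrity constraint (hypothetical rule): revenue is recognized inside the period.
    if not (period_start <= record.recognized_on <= period_end):
        raise ContractViolation(
            f"recognized_on {record.recognized_on} outside reporting period")
    return record


def publish(records: list[RevenueRecord], known_customers: set[str],
            period_start: date, period_end: date):
    """Split a batch into contract-compliant records and rejected (record, reason) pairs."""
    accepted: list[RevenueRecord] = []
    rejected: list[tuple[RevenueRecord, str]] = []
    for record in records:
        try:
            accepted.append(validate(record, known_customers, period_start, period_end))
        except ContractViolation as err:
            rejected.append((record, str(err)))
    return accepted, rejected


if __name__ == "__main__":
    customers = {"CUST-00000042"}
    q3_start, q3_end = date(2024, 7, 1), date(2024, 9, 30)
    batch = [
        RevenueRecord("CUST-00000042", 125_000, date(2024, 9, 30)),  # compliant
        RevenueRecord("cust42", 99_000, date(2024, 9, 15)),          # non-standard ID
        RevenueRecord("CUST-00000042", 50_000, date(2024, 10, 2)),   # outside Q3
    ]
    accepted, rejected = publish(batch, customers, q3_start, q3_end)
    print(f"accepted={len(accepted)} rejected={len(rejected)}")
    for _, reason in rejected:
        print(" -", reason)
```

Running the example accepts one record and rejects two, each with a stated reason. The design choice is that a rejected record never reaches downstream consumers, so the producing domain fixes it at the source instead of a central team reconciling it weeks later. In practice, a check like this would more likely live in a schema registry, a pipeline framework, or an API layer; the sketch only illustrates that the contract is enforced in code at publish time.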

The Strategic Payoff: From Friction to Flow

When you move from a “Big Stick” to “Quality by Design,” governance stops being a cost center and starts being a Strategic Accelerator.

- Innovation at Speed: Your teams build faster because they aren’t waiting for “clearance” from a central authority or battling data inconsistencies. They trust the data because its quality is enforced by the architecture itself.

- Reduced Risk & Enhanced Trust: Proactive quality controls head off the errors and “hallucinations” that lead to bad business decisions, compliance breaches (a major concern in today’s regulatory landscape), and eroded investor confidence. Trust in your data becomes an inherent property, not a constant struggle.

- True Scalability (Operational Leverage): Your organization can finally leverage the cloud’s power and realize the promise of Data Mesh and Data Fabric. Governance becomes a facilitator, not a constraint, allowing the business to grow without a proportional increase in oversight costs. Contrast this with the Operational Drag Coefficient of the Big Stick approach: for every dollar spent on reactive cleanup, businesses often lose far more in opportunity cost, because valuable data scientists and analysts are diverted to data janitorial tasks instead of driving innovation.

- Cost Efficiency: Preventing errors upfront is typically far cheaper than fixing them downstream. The total cost of ownership for your data infrastructure drops sharply when quality is a design principle.

How Shaolin Data Services Guides the Transformation

Transitioning from a culture of enforcement to a culture of design is a surgical operation, not a superficial upgrade. It requires an executive perspective that understands both the boardroom and the server room and, critically, the human element in between.

At Shaolin Data Services, we don’t just give you a “best practices” slide deck. We diagnose the root cause of your data friction and help you architect enduring solutions:

- The Data Process Friction Report ($4,000): We pinpoint the exact points where your current “Big Stick” governance is creating operational drag, stifling innovation, and wasting capital. We identify the why behind your data quality failures.

- Organizational Process Modeling (OPM): We design and implement the new, proactive governance frameworks—the strategic blueprints—that make quality the default state of your business. This isn’t just a technical exercise; it’s a cultural shift embedded in your operations.

- Fractional Chief Data Strategist (CDS): We provide the executive leadership to drive this complex cultural and architectural transformation. As your Fractional CDS, we ensure your data initiatives are treated as executive priorities, aligning your data strategy with your actual business goals.

Stop policing your data. Start designing your excellence. Embrace Quality by Design, and transform data governance from a burden into your most powerful strategic accelerator.
